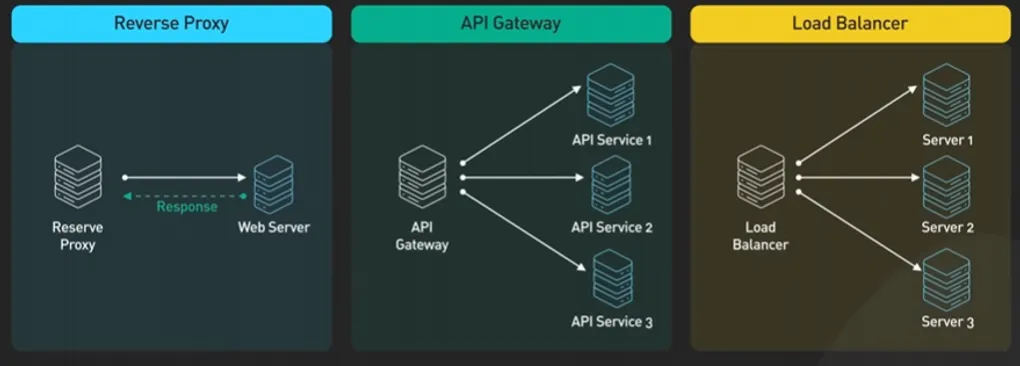

Reverse Proxy vs Load Balancer vs API Gateway

Modern backend architectures often use reverse proxies, load balancers, and API gateways.

They are related, often used together, but they solve different problems at different layers.

This article explains:

- What each component does

- How they differ

- When to use each one

- Common load balancing algorithms

Reverse Proxy

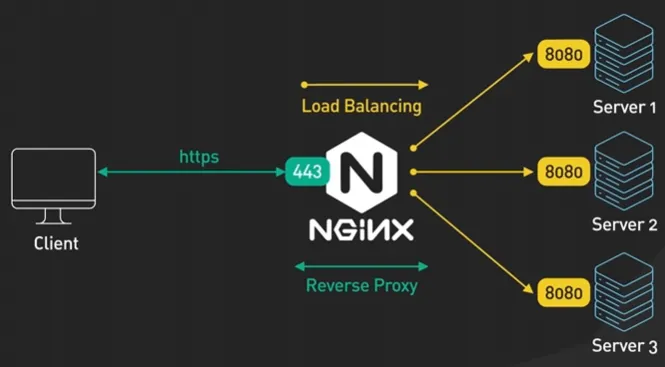

A reverse proxy sits in front of one or more backend servers and forwards client requests to them.

Clients never communicate directly with the backend services.

Responsibilities

- Acts as a single entry point

- Hides internal services and network topology

- TLS/SSL termination

- Caching and compression

- Basic authentication

- Request routing

What it does not focus on

- Advanced traffic distribution strategies

- API-level concerns like rate limiting or versioning

Example

```
Client → Reverse Proxy → Application Server
```

When to use it

- You want to hide backend services

- You need SSL termination

- You want caching or static content delivery

- You want simple routing based on path or host
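The sketch below shows how a reverse proxy might pick an upstream from the Host header and the request path. The route table, host names, and internal addresses are hypothetical. The tie-breaking rule (an exact host beats the wildcard, then the longest path prefix wins) is one reasonable choice, not the matching logic of any specific proxy. Clients only ever see the public host, and the internal addresses stay hidden.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Route:
    host: str         # public Host header to match; "*" matches any host
    path_prefix: str  # e.g. "/static/"
    upstream: str     # hypothetical internal address, never exposed to clients


# Hypothetical routing table for illustration only.
ROUTES: List[Route] = [
    Route("www.example.com", "/static/", "http://10.0.0.10:8080"),
    Route("www.example.com", "/", "http://10.0.0.20:3000"),
    Route("*", "/", "http://10.0.0.30:3000"),
]


def pick_upstream(host: str, path: str, routes: List[Route] = ROUTES) -> Optional[str]:
    """Return the upstream for a request, or None if no route matches."""
    candidates = [
        r for r in routes
        if r.host in (host, "*") and path.startswith(r.path_prefix)
    ]
    if not candidates:
        return None
    # Exact host beats wildcard; among those, the longest prefix wins.
    best = max(candidates, key=lambda r: (r.host == host, len(r.path_prefix)))
    return best.upstream


def forward_url(host: str, path: str) -> Optional[str]:
    """Build the internal URL the proxy would forward the request to."""
    upstream = pick_upstream(host, path)
    return None if upstream is None else upstream + path
```

With this table, `forward_url("www.example.com", "/static/a.js")` resolves to the static-file server. Any host the table does not know falls through to the wildcard route.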

Load Balancer

A load balancer distributes incoming traffic across multiple instances of the same service to improve scalability, availability, and fault tolerance.

Responsibilities

- Distribute traffic across service replicas

- Perform health checks

- Detect failures and reroute traffic

- Enable horizontal scaling

- Support zero-downtime deployments
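The sketch below shows how health checks and failover fit together. It assumes a hypothetical `check` callable that stands in for a real probe, such as an HTTP request to a health endpoint. Replicas that fail the check leave the rotation and rejoin once they pass again. Selection among healthy replicas here is plain round robin; real load balancers let you choose the algorithm.

```python
from typing import Callable, Dict, List


class HealthCheckedPool:
    """Round-robin over replicas, skipping any that failed their last health check."""

    def __init__(self, instances: List[str], check: Callable[[str], bool]):
        self.instances = list(instances)
        self.check = check  # hypothetical probe, e.g. an HTTP GET to a health endpoint
        self.healthy: Dict[str, bool] = {i: True for i in self.instances}
        self._counter = 0

    def run_health_checks(self) -> None:
        # Run periodically: failing replicas leave the rotation and
        # rejoin automatically once they pass again.
        for inst in self.instances:
            self.healthy[inst] = self.check(inst)

    def pick(self) -> str:
        live = [i for i in self.instances if self.healthy[i]]
        if not live:
            raise RuntimeError("no healthy instances available")
        inst = live[self._counter % len(live)]
        self._counter += 1
        return inst


# Simulate app-2 being unavailable (for example, while it is being redeployed).
down = {"app-2"}
pool = HealthCheckedPool(["app-1", "app-2", "app-3"], check=lambda i: i not in down)
pool.run_health_checks()
print([pool.pick() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```

The same mechanism supports rolling deployments. Each replica is taken out of rotation while it is replaced, so the remaining replicas keep serving traffic.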

Example

```
Client → Load Balancer → App Instance 1
                       → App Instance 2
                       → App Instance 3
```

Load Balancing Algorithms

Load balancers use algorithms to decide which backend instance should handle each request.

Round Robin

Requests are distributed sequentially across all available instances.

```
Request 1 → Instance A
Request 2 → Instance B
Request 3 → Instance C
Request 4 → Instance A
```

Pros

- Simple

- Even distribution of request counts across instances

Cons

- Ignores current load

Best for

- Stateless services

- Uniform request cost
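Here is a minimal round-robin sketch. It reproduces the four-request sequence shown above, and the rotation never looks at how busy an instance is. The class name is illustrative.

```python
import itertools
from typing import List


class RoundRobin:
    """Hands out instances in a fixed rotation, ignoring how busy each one is."""

    def __init__(self, instances: List[str]):
        self._rotation = itertools.cycle(instances)

    def pick(self) -> str:
        return next(self._rotation)


rr = RoundRobin(["Instance A", "Instance B", "Instance C"])
for n in range(1, 5):
    print(f"Request {n} → {rr.pick()}")
```

A production balancer serving requests concurrently would also need to guard the shared rotation, for example with a lock.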

Least Connections

Each request is routed to the instance with the fewest active connections.

Pros

- Adapts to uneven workloads

- Better for long-running requests

Cons

- Slightly more complex

- Requires tracking active connections

Best for

- APIs with variable processing time

- WebSockets or long-lived connections
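A minimal least-connections sketch follows. It shows the extra bookkeeping mentioned in the cons: the balancer must increment a counter when a connection starts and decrement it when the connection ends. Breaking ties in list order is an assumption made for simplicity.

```python
from typing import Dict, List


class LeastConnections:
    """Routes each new connection to the replica with the fewest active ones."""

    def __init__(self, instances: List[str]):
        # Dicts keep insertion order, so ties go to the earliest-listed instance.
        self.active: Dict[str, int] = {i: 0 for i in instances}

    def acquire(self) -> str:
        inst = min(self.active, key=lambda i: self.active[i])
        self.active[inst] += 1
        return inst

    def release(self, inst: str) -> None:
        # Must be called when the request or connection finishes.
        self.active[inst] -= 1


lc = LeastConnections(["A", "B", "C"])
first, second, third = lc.acquire(), lc.acquire(), lc.acquire()  # A, B, C
lc.release(second)   # B's request finishes; A and C are still busy
print(lc.acquire())  # B
```

Round robin would have sent that fourth request to A even though A was still busy. Here the long-lived connections on A and C are what steer the new request to B.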

IP Hash

The client’s IP address is hashed to decide which backend handles the request.

```
hash(client_ip) → backend instance
```

Pros

- Session persistence (sticky sessions)

- Same client consistently hits the same backend

Cons

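- Uneven load distribution (for example, many clients behind one NAT or corporate proxy share a single IP)

- Adding or removing instances can remap many clients to different backends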

Best for

- Stateful applications

- Legacy systems without shared session storage
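The sketch below implements IP hashing as a stable hash of the client IP modulo the number of backends. This is one simple variant; real load balancers may hash differently. It uses `hashlib` because Python's built-in `hash()` on strings is randomized per process, so two balancer processes would disagree about where a client belongs. The backend names are hypothetical, and the client IPs come from the 203.0.113.0/24 documentation range.

```python
import hashlib
from typing import List


def pick_backend(client_ip: str, backends: List[str]) -> str:
    # Stable hash: the same IP maps to the same index in every process.
    digest = hashlib.sha256(client_ip.encode()).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


backends = ["app-1", "app-2", "app-3"]
clients = [f"203.0.113.{n}" for n in range(1, 101)]

# Scale out from 3 to 4 backends and count how many clients change backend.
before = {ip: pick_backend(ip, backends) for ip in clients}
after = {ip: pick_backend(ip, backends + ["app-4"]) for ip in clients}
moved = sum(before[ip] != after[ip] for ip in clients)
print(f"{moved} of {len(clients)} clients moved to a different backend")
```

With plain modulo hashing, going from three to four backends is expected to move about three quarters of clients. That is the reshuffling listed under cons. Consistent hashing is a common way to limit this.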

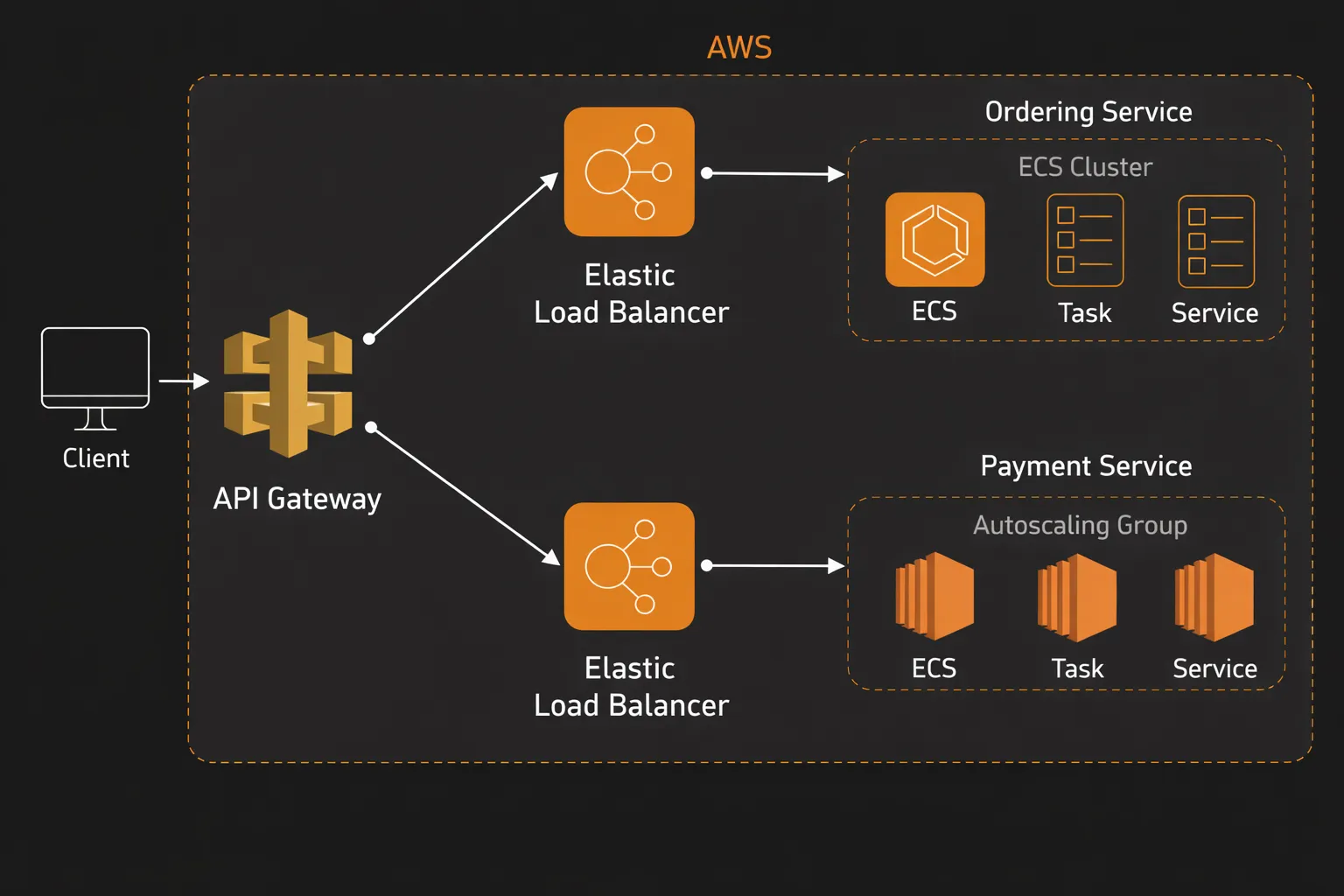

API Gateway

An API Gateway is a specialized entry point designed for APIs, especially in microservices architectures.

It often includes reverse proxy and load balancing capabilities, but adds API-level features such as authentication, rate limiting, and versioning.

Responsibilities

- Authentication and authorization (JWT, OAuth2, API keys)

- Rate limiting and throttling

- Request and response transformation

- API versioning (/v1, /v2)

- Routing to multiple backend services

- Monitoring, logging, and analytics
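The article does not prescribe a rate-limiting algorithm. A token bucket is one common choice and is sketched below. The limits (bursts of 5, refill of 1 request per second) and the use of an API key as the client identity are assumptions for illustration. The clock is injectable so the behaviour can be tested without sleeping.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests


# One bucket per (hypothetical) API key, so each client is limited separately.
buckets: Dict[str, TokenBucket] = {}


def check_rate_limit(api_key: str) -> bool:
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(capacity=5, rate=1.0)
    return buckets[api_key].allow()
```

Because each key has its own bucket, one noisy client exhausts only its own allowance and does not slow down others.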

Example

```
Client → API Gateway
           ├── Auth Service
           ├── Orders Service
           └── Payments Service
```

When to use it

- You expose public or partner APIs

- You run microservices

- You need security, rate limiting, and versioning

- You want a clean separation between clients and services
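The sketch below shows the kind of per-request pipeline a gateway runs before forwarding. It checks an API key, routes by version and resource, and then rewrites the request for the internal service. The header names (`X-API-Key`, `X-Client-Id`), the keys, and the service names are all hypothetical, and the returned status 200 simply means "accepted for forwarding".

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


# Hypothetical configuration: API key -> client id, (version, resource) -> service.
API_KEYS = {"key-123": "partner-acme"}
ROUTES = {
    ("v1", "orders"): "orders-service-v1",
    ("v2", "orders"): "orders-service-v2",
    ("v1", "payments"): "payments-service",
}


def handle(req: Request) -> Tuple[int, str, Optional[Request]]:
    """Return (status, target service, rewritten request)."""
    # 1. Authentication: reject requests without a known API key.
    client = API_KEYS.get(req.headers.get("X-API-Key", ""))
    if client is None:
        return 401, "", None

    # 2. Versioned routing: /v2/orders/42 -> ("v2", "orders").
    parts = req.path.strip("/").split("/")
    if len(parts) < 2 or (parts[0], parts[1]) not in ROUTES:
        return 404, "", None
    service = ROUTES[(parts[0], parts[1])]

    # 3. Transformation: strip the version prefix, drop the key,
    #    and tell the internal service which client called.
    headers = {k: v for k, v in req.headers.items() if k != "X-API-Key"}
    headers["X-Client-Id"] = client
    upstream = Request(path="/" + "/".join(parts[1:]), headers=headers)
    return 200, service, upstream
```

The internal services never see API keys or version prefixes. This is the clean separation between clients and services described above.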

Comparison Table

| Feature | Reverse Proxy | Load Balancer | API Gateway |
|---|---|---|---|
| Entry point | ✅ | ✅ | ✅ |
| Distributes traffic | ⚠️ Basic | ✅ | ✅ |
| Hides backend | ✅ | ❌ | ✅ |
| API authentication | ❌ | ❌ | ✅ |
| Rate limiting | ❌ | ❌ | ✅ |
| Request transformation | ❌ | ❌ | ✅ |
| Best suited for | Infrastructure | Scalability | APIs & Microservices |

Mental Model

- Reverse Proxy → Traffic director

- Load Balancer → Traffic distributor

- API Gateway → Traffic manager with rules

Common Real-World Setup

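These components do not compete; they complement each other and are often used together in modern systems. For example, a load balancer can spread incoming traffic across several API gateway instances, and each gateway can then route requests to services whose replicas sit behind their own load balancers.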